22 Sep

If you are involved in the Software Development Life Cycle (SDLC), you are likely to have come across, or even performed, a measure of performance testing.

When done well, performance testing routinely detects performance issues before new application or infrastructure changes are released to a production environment, preventing costly rollbacks and negative business impact.

Yet, despite the huge improvements in application and service performance that it can yield, and despite being a well-established IT discipline, performance testing is often misunderstood and overlooked by many software and DevOps professionals.

Since its inception, Moviri has developed a core expertise and body of work in improving the performance of our clients' applications. In more than 20 years in the business, we have witnessed our fair share of bizarre situations and encountered plenty of confusion on the topic. This is why we have decided to write an introductory guide to help you select your performance testing platform.

This guide is divided into two articles. In the first one, we talk about when and where it’s best to perform performance tests and why your company needs a performance testing platform. In the second one, we talk about all the key considerations you need to take into account before selecting a performance testing platform.

Performance tests ≠ Functional tests

Before we start, we want to clarify something: performance tests are not functional tests. Functional tests ensure that an application does what it is supposed to do; performance tests ensure that it does so fast enough and reliably enough under load.

In fact, performance tests complement functional tests, in that they are designed to address a plethora of non-functional requirements that must be checked, and validated, to avoid a potentially catastrophic crash in a production environment. When incidents like this happen, engineers and managers are forced to scramble and spend all day on the phone, sometimes during their well-deserved vacations!

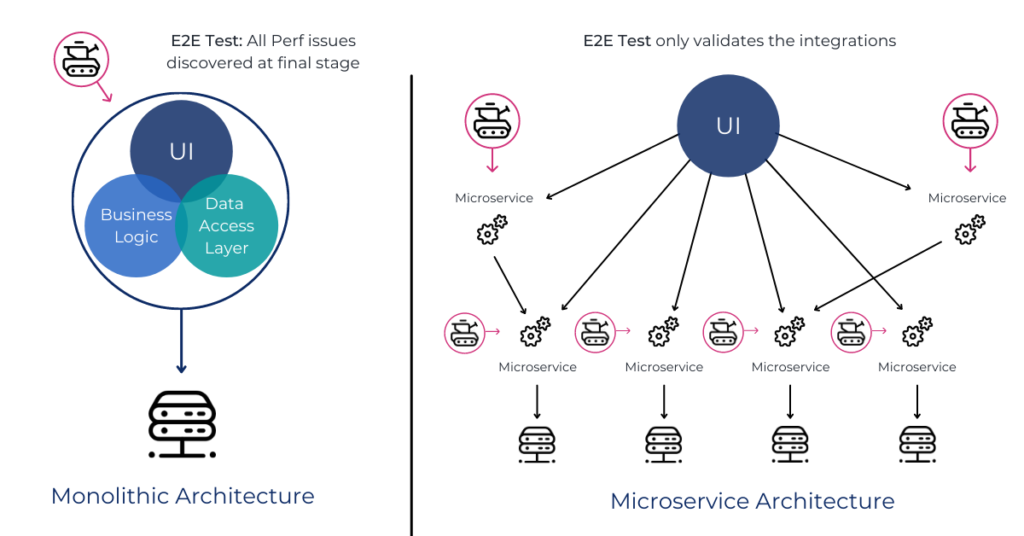

Until just a few years ago, only the Release Candidate — i.e. the final version of the application, supposedly ready to go into production — underwent performance testing.

With SDLCs accelerating and continuous delivery approaches becoming the norm, the Release Candidate is nowadays often completed just days before the go-live date. This means that, increasingly, teams either rush to complete development or start testing before all the changes are ready to be released.

Either way, this approach has numerous downsides:

- Several bugs are almost invariably introduced in the last few days;

- Integration issues emerge because the application has never been tested in its proper architectural context;

- Tests are executed on an unstable, or not yet final, version of the application, which leads to unreliable results;

- When bugs that impact performance do appear, there is little time to address them before the go-live.

Fortunately, the evolution of software development and the introduction of modern architectures that rely on the separation of application components play in our favor. We can now test small components more easily than ever, before merging all the services in the final application.

When should you run performance tests?

There are different strategies, but what we propose is a Performance by Design approach. In this approach, we start by performance testing a single small component. Such a component can be a single microservice, a direct injection into a queue, or a specific API.

As components are developed, we proceed with more performance tests. This way, we find macro issues early in the SDLC and build on what we learn, piece by piece.
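As a rough illustration of testing one small component in isolation, the sketch below times repeated calls to a single function and reduces the results to a few summary figures. It is a minimal sketch, not a prescribed method: `handle_request`, its payload, and the iteration count are hypothetical stand-ins for whatever microservice handler or API call is under test.

```python
import statistics
import time


def handle_request(payload: dict) -> dict:
    """Hypothetical component under test; stands in for one microservice handler."""
    return {"id": payload["id"], "total": sum(payload["items"])}


def measure_latencies(component, payload, iterations=200):
    """Call the component repeatedly and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        component(payload)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies):
    """Reduce raw latencies to figures that can be compared between builds."""
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile: rank = ceil(0.95 * N), computed with integers.
    p95_rank = (95 * len(ordered) + 99) // 100
    return {
        "count": len(ordered),
        "mean_ms": statistics.mean(ordered),
        "p95_ms": ordered[p95_rank - 1],
        "max_ms": ordered[-1],
    }


if __name__ == "__main__":
    sample_payload = {"id": 1, "items": [1, 2, 3]}  # illustrative input only
    print(summarize(measure_latencies(handle_request, sample_payload)))
```

Recording the same summary for each new build of the component is one simple way to notice a slowdown long before the Release Candidate exists.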

Finding small issues in the early stages of the lifecycle is much more cost-effective than trying to resolve issues the day before the release. This doesn’t mean that we can forget about the final test of the complete Release Candidate. In most cases, it is still the final and best validation of the software before rollout. But the Performance by Design approach streamlines the process and ensures that application performance is optimized and risks mitigated.

Where should you run performance tests?

Having a testing environment that is a perfect replica of the production environment, with the new Release Candidate running on it, is one of the best testing options, unsurprisingly. Alas, this is not a scenario that is often available.

So why not exploit the flexibility and immediacy provided by cloud services and container architectures? Of course, it's always possible to scale down and test in a smaller environment, but then many assumptions and extrapolations have to be made. We have to be sure that the test environment is as close to an identical copy of the production environment as possible, at least with respect to the component types and software versions.

For example, it makes no sense to have solid-state disks in production while the test environment comes with traditional hard drives. The same applies to every other layer of the application stack. The more similar the environments are, the more reliable our final estimate of the real capacity will be. Otherwise, we risk making wrong assumptions when extrapolating scalability.

So, why do you need a performance testing platform?

In our experience, we have often come across operations and development teams that were running “performance tests” manually, launching a very limited set of calls, sometimes without any concurrency at all. In many cases, tests are launched with a black-box approach, without even monitoring basic metrics on the hosts.

For performance testing to be effective, tests must be reproducible: the same test has to be run multiple times under the same conditions. Manual performance tests, and those designed without the support of a good testing tool, lack consistency and reliability.
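To make the contrast with a handful of manual calls concrete, here is a minimal sketch of a repeatable, concurrent test run using only the Python standard library. Everything named here is an assumption for illustration: `LoadConfig`, `call_service` (a placeholder that just sleeps), and the default numbers are hypothetical and do not come from any particular testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadConfig:
    """Fixed test parameters, so every run is launched under the same conditions."""
    virtual_users: int = 20
    requests_per_user: int = 50


def call_service() -> None:
    """Hypothetical request; replace with a real call to the system under test."""
    time.sleep(0.001)


def virtual_user(requests: int) -> list:
    """One simulated user issuing requests back to back; returns latencies in ms."""
    latencies = []
    for _ in range(requests):
        start = time.perf_counter()
        call_service()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def run_load_test(config: LoadConfig) -> dict:
    """Run all virtual users concurrently and report basic aggregate figures."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=config.virtual_users) as pool:
        per_user = list(
            pool.map(virtual_user, [config.requests_per_user] * config.virtual_users)
        )
    elapsed = time.perf_counter() - started
    all_latencies = [ms for user in per_user for ms in user]
    return {
        "requests": len(all_latencies),
        "elapsed_s": elapsed,
        "throughput_rps": len(all_latencies) / elapsed,
        "max_ms": max(all_latencies),
    }


if __name__ == "__main__":
    print(run_load_test(LoadConfig()))
```

Because the configuration is an immutable value, rerunning with the same `LoadConfig` repeats the same load shape. A real platform adds what this sketch leaves out, such as host monitoring, realistic load models, and distributed load injection.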

A proper performance testing platform should:

- Automate test execution.

- Simulate hundreds or thousands of concurrent virtual users.

- Model loads under a variety of scenarios, according to the real data extracted from production environments.

- Test different scenarios in a matter of a few clicks, providing the ability to execute:

  - Load tests

  - Stress tests

  - Soak tests

  - Break tests

  - and more…

- Allow deep and complete test analysis by integrating with external monitoring sources.

- Reproduce the same test again and again with identical configurations to check for possible regressions between releases.

- Scale horizontally, when there is a need to inject more load.

- Provide rich and intuitive data visualization tools to analyze the results.
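The regression check mentioned in the list above can be sketched as a comparison between the summary of a baseline run and that of a new release. This is an illustrative sketch only: `find_regressions`, the metric names, and the 10% tolerance are hypothetical choices, and the function assumes metrics where a higher value is worse, such as latencies.

```python
def find_regressions(baseline: dict, candidate: dict, tolerance: float = 0.10) -> dict:
    """Return the metrics that got worse by more than `tolerance` (relative change).

    Assumes "higher is worse" metrics such as latencies in milliseconds.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        if metric not in candidate or base_value <= 0:
            continue  # nothing comparable for this metric
        new_value = candidate[metric]
        if (new_value - base_value) / base_value > tolerance:
            regressions[metric] = (base_value, new_value)
    return regressions


if __name__ == "__main__":
    # Made-up figures, only to show the shape of the comparison.
    previous_release = {"mean_ms": 40.0, "p95_ms": 100.0}
    new_release = {"mean_ms": 42.0, "p95_ms": 125.0}
    print(find_regressions(previous_release, new_release))
```

Such a comparison is only meaningful when both runs used identical test configurations, which is why reproducibility appears in the list of platform requirements.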

Now you know that you need performance tests, and you know where and when it is best to run them. But what are the key points to consider in choosing the tool that best fits your needs? Stay tuned for the second part, where we will pinpoint the main aspects you have to keep in mind!

© 2022 Moviri S.p.A.