
KubeCon Europe 2022 – all about Kubernetes, autoscalers, and performance optimization

31 May

How I ended up speaking at KubeCon & CloudNativeCon Europe 2022 and what I learned in the process.

Earlier this month, from May 16 to May 20, 2022, we traveled to Valencia to attend KubeCon and CloudNativeCon Europe 2022, an event that is quickly becoming a point of reference for the enterprise open source community and software vendors.

KubeCon and its side events have grown tremendously over the last few years, somewhat bucking the pandemic trend that substantially disrupted many tech events and conferences, with many shrinking IRL audiences and virtual participants clearly fatigued by two years of webinars and live streams.

Today, KubeCon & CloudNativeCon is the flagship conference of the Cloud Native Computing Foundation (CNCF), gathering adopters and technologists from leading open source and cloud-native communities, and its agenda is chock full of valuable content and insights.

As experts in many of the technologies that underpin the open-source and cloud-native revolutions, we at Moviri did not just participate: we also submitted a conference talk proposal. Fierce competition notwithstanding, our proposal was accepted. Almost immediately upon receiving the news, we booked tickets to Valencia and I started working on my presentation: “Getting the optimal service efficiency that autoscalers won’t give you”.

This year’s four-day event was packed with interesting sessions, fun things to do, and unexpected things to see. There were more than 100 sessions – technical sessions, deep-dives, case studies, keynotes, training – from which to choose. In fact, we had to plan our days very carefully to maximize our KubeCon experience and learning opportunity. We could not miss finding out how to build a nodeless Kubernetes platform, scale Kubernetes clusters or… perform chair yoga!

Speaking at KubeCon: my talk on Kubernetes AI-powered optimization

On May 18, I got the opportunity to speak in front of a room full of people who wanted to discover more about Kubernetes autoscalers and how to get to the optimal system configuration.

First of all, I must admit that I was a bit nervous. It was my first time speaking at KubeCon and I wanted everything to go smoothly. There is of course no substitute for preparation. Repetition is essential, not only to improve the content iteratively, but also to grow confidence in your ability to speak in front of a large audience. In the weeks leading up to KubeCon, my teammates helped me a lot by checking my slides, listening to my rehearsals, and providing feedback that I incorporated into my material and my delivery.

The presentation title I settled on was: “Getting the optimal service efficiency that autoscalers won’t give you”. I chose this title because my presentation focused on the fact that often the “best” configurations, i.e. the ones that guarantee the best trade-off between service cost and performance, can’t simply be provided by autoscalers!

When tuning a microservices application in Kubernetes, a key challenge is properly sizing containers (i.e. CPU and memory), as application changes and traffic fluctuations constantly modify the conditions impacting performance. Kubernetes autoscalers are the standard solution to automatically adjust container resources for service efficiency.

The problem with today’s applications is their constant evolution. Cloud-native applications have dozens or hundreds of microservices, spanning a wide set of technologies. And, of course, all these technologies have hundreds of tunable parameters. The result? Finding the best configuration for our application among hundreds of thousands of possible configurations is humanly impossible.

Kubernetes is no exception. It is well known that Kubernetes resource management is somewhat complex, and manually tuning Kubernetes microservices applications is a challenge even for the most experienced performance engineers and SREs. Tuning just one microservice can take up to several weeks if done manually. Not to mention the so-called Kubernetes failure stories that we have all heard at least once.

To understand the solution, we must first go back to Kubernetes fundamentals and see how its resource management works. This way we can understand the main parameters that impact Kubernetes application performance, stability, and cost-efficiency.

There are six key factors:

- Setting proper pod resource requests is critical to ensure Kubernetes’ cost-efficiency.

- Resource limits strongly impact application performance and stability.

- A lesser-known effect of CPU limits is that they may disrupt service performance, even at low CPU usage.

- Getting rid of CPU limits is not a good idea: setting resource requests and limits is very important to ensure Kubernetes stability.

- Kubernetes Vertical Pod Autoscalers (VPA) do not address service reliability.

- Kubernetes Horizontal Pod Autoscalers may multiply inefficiency.
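
To make the point about CPU limits more concrete, here is a minimal sketch of how a limit can slow down a service whose average CPU usage looks low. It is not taken from the talk: it assumes the Linux CFS bandwidth controller with its default 100 ms period, a burst that starts exactly at a period boundary, and at least as many busy threads as the limit has cores. The function name and all numbers are hypothetical.

```python
def burst_latency_ms(cpu_limit_cores, threads, work_ms_per_thread, period_ms=100.0):
    """Estimate the wall-clock time needed to finish one burst of parallel work.

    Simplified model (assumptions, not measured behavior):
    - the container may use cpu_limit_cores * period_ms of CPU time per period;
    - once that quota is spent, every thread is throttled until the next period;
    - all threads run in parallel and the burst starts at a period boundary;
    - threads >= cpu_limit_cores, so the quota can be spent within one period.
    """
    quota_ms = cpu_limit_cores * period_ms          # CPU time allowed per period
    remaining_cpu_ms = threads * work_ms_per_thread  # total CPU time the burst needs
    elapsed_ms = 0.0
    while remaining_cpu_ms > quota_ms:
        # Quota exhausted: the rest of this period is lost to throttling.
        remaining_cpu_ms -= quota_ms
        elapsed_ms += period_ms
    # The leftover work fits in the current period and runs on all threads.
    elapsed_ms += remaining_cpu_ms / threads
    return elapsed_ms


# One burst per second: 8 threads x 20 ms = 160 ms of CPU time,
# i.e. an average usage of only 0.16 cores.
print(burst_latency_ms(cpu_limit_cores=1, threads=8, work_ms_per_thread=20))  # 107.5
print(burst_latency_ms(cpu_limit_cores=8, threads=8, work_ms_per_thread=20))  # 20.0
```

In this hypothetical case the 1-core limit makes the burst take 107.5 ms instead of 20 ms, even though the container uses about 16% of its limit on average.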

How does all this translate into the real world?

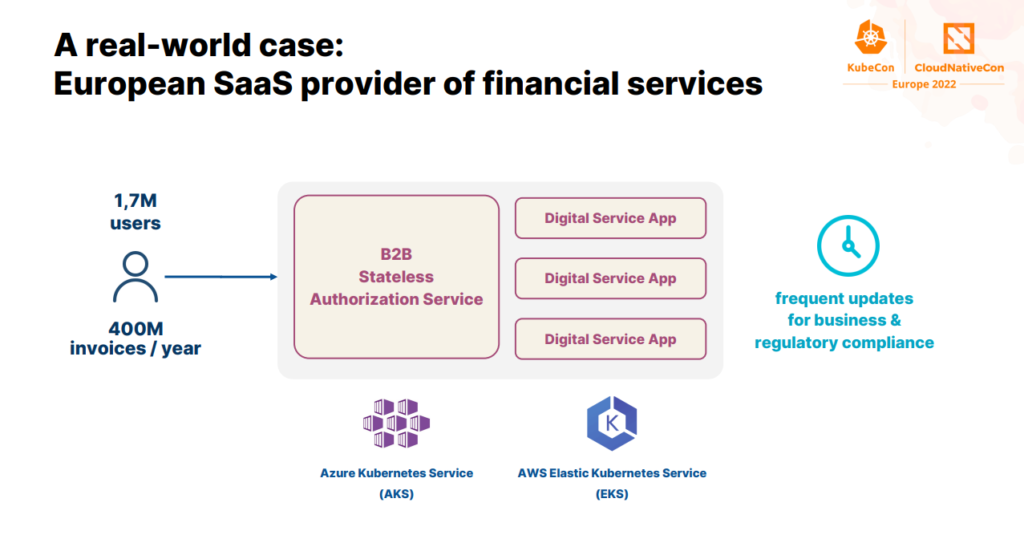

A real-world case: SaaS provider of financial services

In my presentation, I included the results of an extensive tuning campaign Moviri conducted on a real application running on Kubernetes. We used AI to automate the optimization process of a transaction-heavy application by a leading SaaS financial services provider, with the objective of minimizing cloud cost without compromising performance.

The optimization target we used was a Java-based microservice running on Azure Kubernetes Service, which is in charge of providing the B2B authorization service that all digital services use.

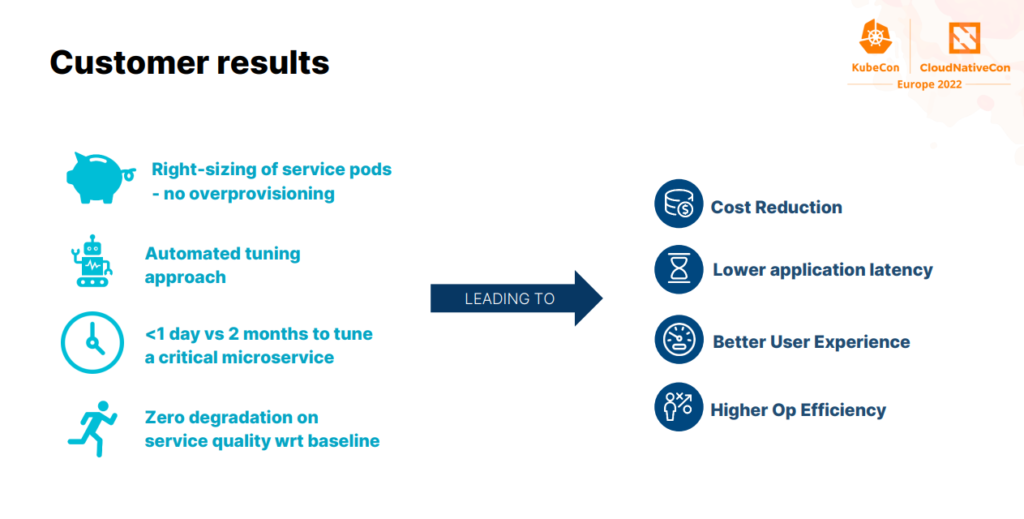

The first thing we did was run a load test against the initial configuration of the microservice, replicating a diurnal traffic pattern. This revealed an unwanted behavior caused by the JVM max heap being configured higher than the memory request. Then we started the AI-powered optimization process by setting an optimization goal: minimizing the overall cost of the service running on the Azure cloud.
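
The kind of mismatch described here (a JVM max heap larger than the pod memory request) can be caught with a simple check before running any load test. The following is only a sketch under stated assumptions: the helper names are hypothetical, the JVM options string and memory request values are made up rather than taken from the case study, and the parser handles only integer quantities with the most common suffixes.

```python
import re

# Kubernetes memory suffixes: decimal (k, M, G, T) and binary (Ki, Mi, Gi, Ti).
K8S_UNITS = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
             "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
# JVM -Xmx suffixes are binary multiples and case-insensitive.
JVM_UNITS = {"": 1, "k": 2**10, "m": 2**20, "g": 2**30}


def k8s_memory_bytes(quantity):
    """Convert an integer Kubernetes memory quantity such as '2Gi' to bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity)
    if not match or match.group(2) not in K8S_UNITS:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    return int(match.group(1)) * K8S_UNITS[match.group(2)]


def jvm_max_heap_bytes(jvm_options):
    """Return the -Xmx value in bytes, or None if the flag is not present."""
    match = re.search(r"-Xmx(\d+)([kKmMgG]?)", jvm_options)
    if not match:
        return None
    return int(match.group(1)) * JVM_UNITS[match.group(2).lower()]


def heap_exceeds_request(jvm_options, memory_request):
    """True when the configured max heap is larger than the pod memory request."""
    heap = jvm_max_heap_bytes(jvm_options)
    return heap is not None and heap > k8s_memory_bytes(memory_request)


# Hypothetical values, for illustration only.
print(heap_exceeds_request("-Xms1g -Xmx4g", "2Gi"))  # True
print(heap_exceeds_request("-Xmx1g", "2Gi"))         # False
```

Note that a JVM needs memory beyond the heap (metaspace, thread stacks, and so on), so a heap that merely fits within the request is a necessary condition rather than a guarantee of a well-sized pod.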

In this study, we considered nine tunable parameters: four Kubernetes parameters and five JVM parameters. All of them were tuned simultaneously to make sure that the JVM was optimally configured to run in the Kubernetes pod. We found the best configuration for the given goal after a few experiments, in less than a day. That configuration cut the bill by 49% and also proved beneficial in terms of resilience and performance.

During the optimization study, we also found other interesting configurations, such as one that reduced costs (our goal) by 16% and displayed a higher improvement in resilience: the response time peak upon scaling out was lower, and the replicas scaled back in after the high-load phase.

All this was possible thanks to the Akamas AI-powered optimization platform, which we used specifically to conduct this tuning campaign. Akamas was founded as a spinoff of the performance engineering team at Moviri and is now an independent company with which we have a strategic partnership. If you are interested in discovering more about the specifics, you can check out their case study.

So, what is the key takeaway from my talk? You always have to tune your pods, since K8s won’t do it for you. And the best way to do it is to leverage AI, which can help you deal with the complexity of modern applications.

The best KubeCon 2022 talks about Kubernetes resources optimization

As I said, the event was packed with interesting and insightful sessions, and I was as much a speaker as an attendee, eager to take it all in and learn. For example, Mercedes-Benz showed how they migrated 700 Kubernetes clusters to Cluster API with zero downtime, and Spotify discussed how Backstage has become the core of their developer experience. Netflix talked about how to make Spark, Kubeflow, and Kubernetes work with ML platforms, while Intel explained how to make smart scheduling decisions for your workloads. Google demonstrated how to build a nodeless Kubernetes platform and how to use Kubernetes to improve GPU utilization.

Of course, I wasn’t the only one speaking about Kubernetes resource management or how to use AI and machine learning to optimize the performance of container-based applications. In my opinion, three talks that analyzed the same problem (Kubernetes resource management and autoscalers) stood out.

Autoscaling Kubernetes deployments: a (mostly) practical guide with New Relic (Pixie team)

The first talk that I’d like to single out was from Natalie Serrino, a software engineer at New Relic (Pixie team), who provided a practical guide on how to autoscale Kubernetes deployments.

Natalie started by explaining why autoscaling is useful, what the different types of Kubernetes autoscalers are (Cluster, Vertical Pod, Horizontal Pod), and their main characteristics. She talked about four methods of doing resource sizing in Kubernetes: random guess, copypasta, proactive iteration, and reactive iteration. The method I presented during my talk is, in fact, a form of proactive iteration. Hearing somebody else acknowledge our approach gave me chills.

She also presented a demo on autoscaling applications by using Prometheus, Linkerd, and Pixie. With the help of the demo, Natalie answered questions such as how to set the minimum and maximum number of replicas, or how often to look for changes in the metric. She explained that there isn’t a single metric on which you always need to scale. Instead, you should choose the metric to scale on based on your workload. In the end, she presented an “impractical demo” of a Turing-complete autoscaler.
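
For readers who want to see how the replica bounds and the chosen metric interact, here is a minimal sketch of the scaling rule described in the Kubernetes documentation for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. This is not code from Natalie’s demo; the function name and the example values are hypothetical, and the sketch ignores details such as stabilization windows and pod readiness.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas, tolerance=0.1):
    """Compute the replica count an HPA-style rule would aim for."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: no scaling action.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum number of replicas.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU utilization with a 60% target: scale out to 6.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))    # 6
# A large spike is capped by the maximum number of replicas.
print(desired_replicas(10, 200, 50, min_replicas=2, max_replicas=20))  # 20
```

The same rule works for any metric, which is why the choice of metric and of the minimum and maximum bounds matters more than the formula itself.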

How Adobe is optimizing resource usage in Kubernetes by Adobe

We all know the possibilities, the flexibility, and all of the benefits that the migration to Kubernetes may offer. We also understand the challenges that come with this migration. Carlos Sanchez, Cloud Engineer at Adobe, explained how they extensively use standard Kubernetes capabilities to reduce resource usage and how they have built solutions at several levels of the stack in order to improve it. They experimented with several features to reduce the number of clusters they need and, of course, their overall bill.

Carlos started by explaining what Adobe Experience Manager (AEM) is, its characteristics, and how it runs on Kubernetes. Since AEM is a Java application, I was even more curious about their findings and the solutions they have implemented. He showed how all types of Kubernetes autoscalers work with AEM and presented a few strategies they have in place to manage resources, such as hibernation and Automatic Resources Configuration (ARC).

He concluded by saying that a combination of cluster autoscaler, VPA, HPA, hibernation, and ARC, both at the application and infrastructure level, will help organizations to optimize and reduce the resources used.

How Lombard Odier deployed VPA to increase resource usage efficiency

The last talk that I found particularly interesting, and that focused on topics similar to mine, was from Vincent Sevel, Architect / Platform Ops at Lombard Odier. His company has been a private bank in Switzerland since 1796 and its main businesses are private clients, asset management, and technology for banking. Their banking platform is a modular service-oriented solution, with approximately 800 application components. A large modernization initiative, both functional and technical, started in 2020.

The goals of Lombard Odier were to optimize the placement of pods in a Kubernetes cluster, tune resources on worker nodes, optimally size the underlying hardware, avoid waste, and save money, all without sacrificing behavior. Definitely complex.

Vincent explained how their application was built, described the clusters involved, and showed a few use cases. Even though they hadn’t finished testing everything and analyzing all their data, they had already learned some intriguing lessons. For example, they were surprised by the low CPU recommendations of the VPA, and they faced the same JVM startup issues we analyzed in our talk.

At Moviri, Performance Engineering is in our blood, with more than 20 years of expertise in this domain. We continue to improve our skills every day while providing services and solutions for companies across the globe. Contact us if you want to discover how our experts can help you get better performance from your Kubernetes applications.

If you want to ask me something about my presentation at KubeCon, you can contact me on LinkedIn or drop me an email here 😉


© 2022 Moviri S.p.A.

Via Schiaffino 11

20158 Milano, Italy

P. IVA IT13187610152