03 Apr

ContentWise and Harmonic Collaborate on AI-Programmable Linear Channel Personalization

Media brands and content providers automatically repurpose content libraries into custom FAST channels.

23 Jan

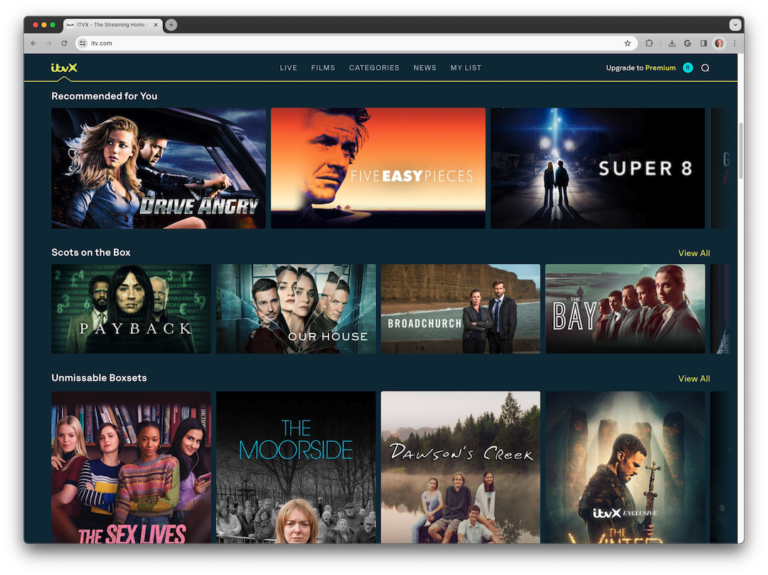

ITV Selects ContentWise to Power ITVX Advanced Recommendations and UX Personalization

ContentWise UX Engine was launched in production on ITVX in November 2023, generating double digit increases in conversion rates and substantial lift in average watch time.

23 Jan

Akamas enhances its cloud optimization capabilities with HPA, OpenShift, Kubernetes, OAuth2 support ...

Akamas 3.4.0 is our biggest release to date, and incorporates a wealth of improvements that were highly requested from our customers.

22 Jan

Join us in the countdown for Arduino Days 2024

Now in its 11th year, this is the event that brings together all Arduino users and shines the spotlight on the most outstanding projects and ideas

13 Dec

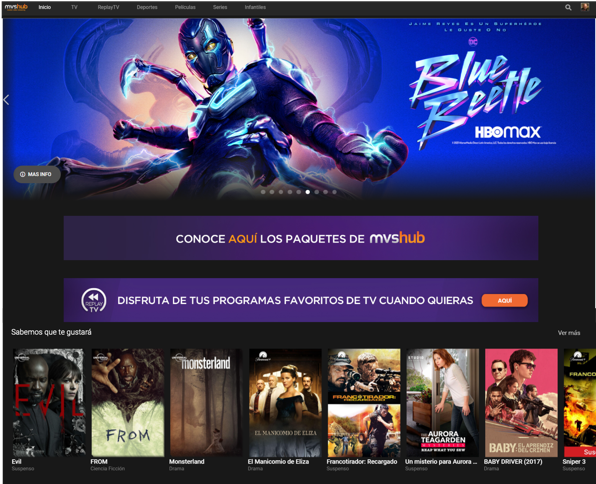

Dish Mexico and SES Select ContentWise and Minerva for mvshub UX

Minerva’s and ContentWise’s software at the core of the mvshub super aggregation platform based on SES’s Online Video Platform (OVP), allows Dish Mexico to a personalized next-generation viewing experience.

22 Nov

Insights from Kubecon 2023 – K8s for GenAI, Efficiency, and Sustainability

While K8s offers excellent capabilities, realizing its full efficiency and scalability benefits requires proactive tuning.

15 Sep

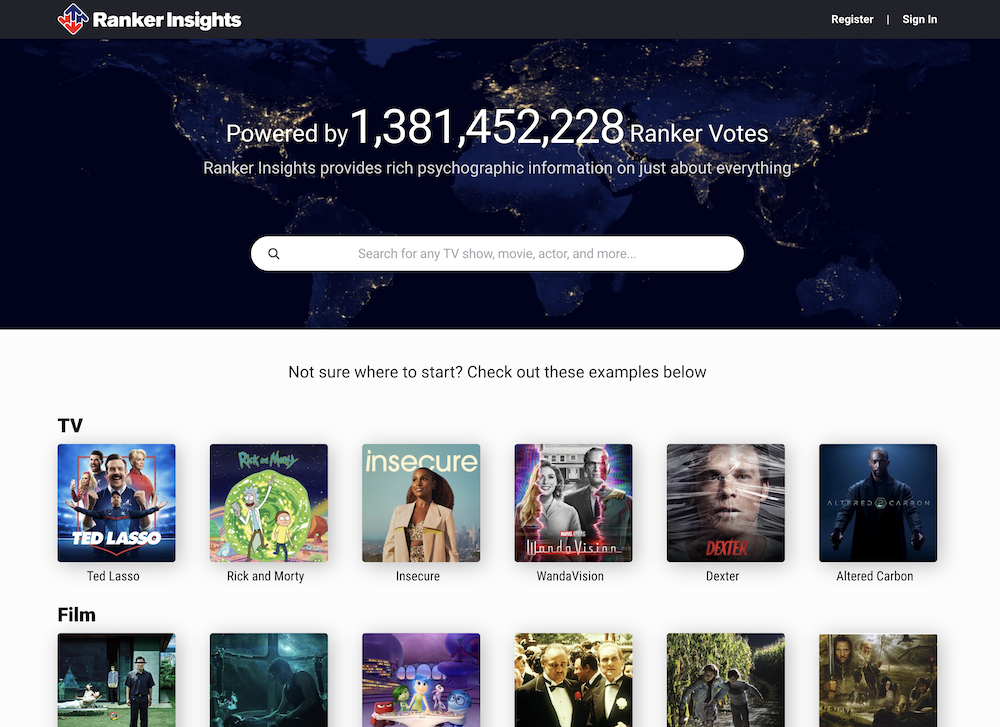

ContentWise and Ranker Deliver Personalization Powered by 1+ Billion Fan Votes

The integration of Ranker Insights and UX Engine enables operators to leverage the power of fan-based rankings and collective consumer opinion directly on their platforms.

14 Sep

Like VOD but in four dimensions? FAST channel programming with Contentwise Playlist Creator

Thanks to automation and AI, Playlist Creator, leverages content recommendation recipes to generate FAST channel playlists that are fed to cloud playout systems.

13 Sep

From Seed to Success: Moviri’s Story of Investing and Innovating with Cleafy

Our patient investment in Cleafy embodies our mission: to identify, invest, and nurture technology-driven solutions to enterprise problems.

12 Sep

Cleafy Secures €10M in Funding Led by United Ventures to Foster a Secure Digital Banking Ecosystem

The investment round, led by United Ventures through the fund UV T-Growth, will support Cleafy's expansion into new markets and further development of its technology platform.